I recently worked on a project where we needed to copy some large files from a specified library in SharePoint Online. In that library, there were several layers of folders and many different types of files. My goal was to copy files that had a certain file extension and a file name that started with a specific string.

I began by comparing the capabilities between Azure Data Factory and Logic Apps. Logic Apps allows me to start the process via a webhook. It also has a SharePoint online connector that provides easy AAD authentication. And there is a special operation that allows me to retrieve the properties (path, filename) of files without retrieving the files. This makes it easy to loop through the list of files that is already filtered to only the files I want.

Azure Data Factory does have a SharePoint Online connector, but you can’t use it to copy files. You must use the HTTP connector to copy files from SharePoint in Data Factory. And that requires you to make a web call first to get a bearer token to be used in the authentication for the source of the copy activity.

While the ADF options work, I thought I would go with the Logic App because it felt cleaner and easier for others to understand. That is until I tried to copy some large files and encountered the following error.

Http request failed as there is an error: 'Cannot write more bytes to the buffer than the configured maximum buffer size: 104857600.'.

It turned out there were some extremely large files to be copied from that SharePoint library. SharePoint has a default limit of 100 MB buffer size, and the Get File Content action doesn’t natively support chunking.

At that point I would have to figure out how to handle the chunking using an HTTP call. That made the Logic App no better than Data Factory for my purposes. And since I already had part of the larger process in Data Factory, I went back there to see how it handled chunking.

Copying a Subset of Files from SharePoint Online Using Data Factory

And it turns out you don’t have to do anything special in Data Factory to handle the chunking of the large files – it does that work for you.

So I set up a similar process to what I had in my Logic App in my ADF pipeline.

First, I used a Lookup activity to get the list of files. When you do a lookup against a SharePoint Online dataset, you can use OData filters to limit the data returned by the lookup. In my case this was necessary because I only wanted 144 of the items out of the list of 4300. Lookups can only return 5,000 rows, so I definitely needed to add filters to my query. Also, folders are considered an item in the data returned, so if you are looking only for files, you need to filter out folders (either by content type or by the name of the file).

The results of the lookup, returning one item per file, are fed into a For Each activity. Inside the For Each activity, I placed a Web activity to get the necessary bearer token and a Copy activity to copy the file to blob storage.

To get a bearer token, you need a service principal with the correct permissions in SharePoint. Then you make a POST to https://accounts.accesscontrol.windows.net/{tenantID}/tokens/OAuth/2.

You need the following header: Content-Type: application/x-www-form-urlencoded.

In the body of the HTTP call, you must include: grant_type=client_credentials&client_id={clientid}@{tenantid}&client_secret={secretvalue}&resource=00000003-0000-0ff1-ce00-000000000000/{SharePoint tenant}.sharepoint.com@{tenantID}.

Make sure to set Secure Output so your credentials aren’t written to the ADF logs. Preferably, you would store the client ID and client secret in Key Vault and use a web activity to look them up before getting the token.

You can then use the output from the web activity in your copy activity.

The Additional Headers should be populated with the auth token as shown below:

Authorization: Bearer @{activity('WEB_GetToken').output.access_token}

Since I don’t need to read the file contents, just copy it to blob storage, I used a binary dataset. I parameterized the dataset and linked service so my For Each activity could feed it the path and name of each file. This is because the lookup provides the path separately from the file name (which includes the file extension).

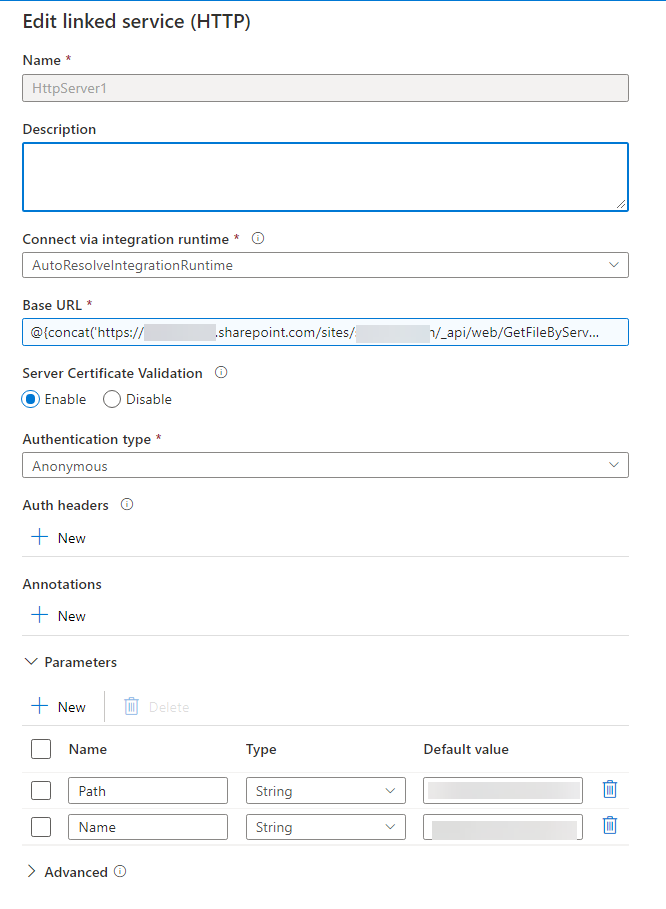

The linked service uses the HTTP connector. It has two parameters added: path and name.

The base url I used in the linked service is:

@{concat('https://{tenant name}.sharepoint.com/sites/{site name}/_api/web/GetFileByServerRelativeUrl(''',replace(linkedService().Path,' ','%20'),'/',replace(linkedService().Name,' ','%20'),''')/$value')}

If my file is located at https://mytenant.sharepoint.com/sites/site1/libraryname/folder1/folder2/folder3/myfile.CSV, the URL I need to retrieve the file is https://mytenant.sharepoint.com/sites/site1/libraryname/folder1/folder2/folder3/myfile.CSV')/$value.

So once I add my destination info to the Copy Activity, I can copy all the files retrieved from my lookup to blob storage.

A Few Tricky Things

Everyone’s SharePoint tenant is set up differently. Your url will vary based upon the configuration of your site collections/sites. When I tried this in my tenant, I didn’t have to include the “site/sitename” before “/_api/web…”. But I did in my client’s tenant when I was building this.

We granted permissions to the service principal at the site collection level to get this to work. If there was a way to grant lower permissions, we couldn’t figure it out. Here the XML we used when the SharePoint admin was granting permissions.

<AppPermissionRequests AllowAppOnlyPolicy="true">

<AppPermissionRequest Scope=http://sharepoint/content/sitecollection/web

Right="Read" />

<AppPermissionRequest Scope=http://sharepoint/content/sitecollection/web/list

Right="Read" />

</AppPermissionRequests>

Your auth token is valid for 1 hour. If you copy a bunch of files sequentially, and it takes longer than that, you might get a timeout error. That is why I moved my web activity inside the For Each activity. If you know it’s only a few files and they are quick, you should just get one bearer token and use it for all the files.

Lastly, be sure to set retries and timeouts appropriately. Remember that the default timeout for an activity is 7 days, and that is probably not desirable.

It took a lot of trial and error for me to get this working, as I couldn’t find many blog posts or docs about this. Special thanks to Bill Fellows for helping me through it. I sincerely hope this blog post helps someone avoid my struggles.