I had the pleasure of presenting a full-day pre-conference session on the Friday before SQLSaturday Austin-BI last weekend. I could spend paragraphs telling you how enjoyable and friendly and inclusive the event was. But I’d like to focus on one really cool aspect of my speaking experience: I had both closed captioning and sign language interpreters in my pre-con session.

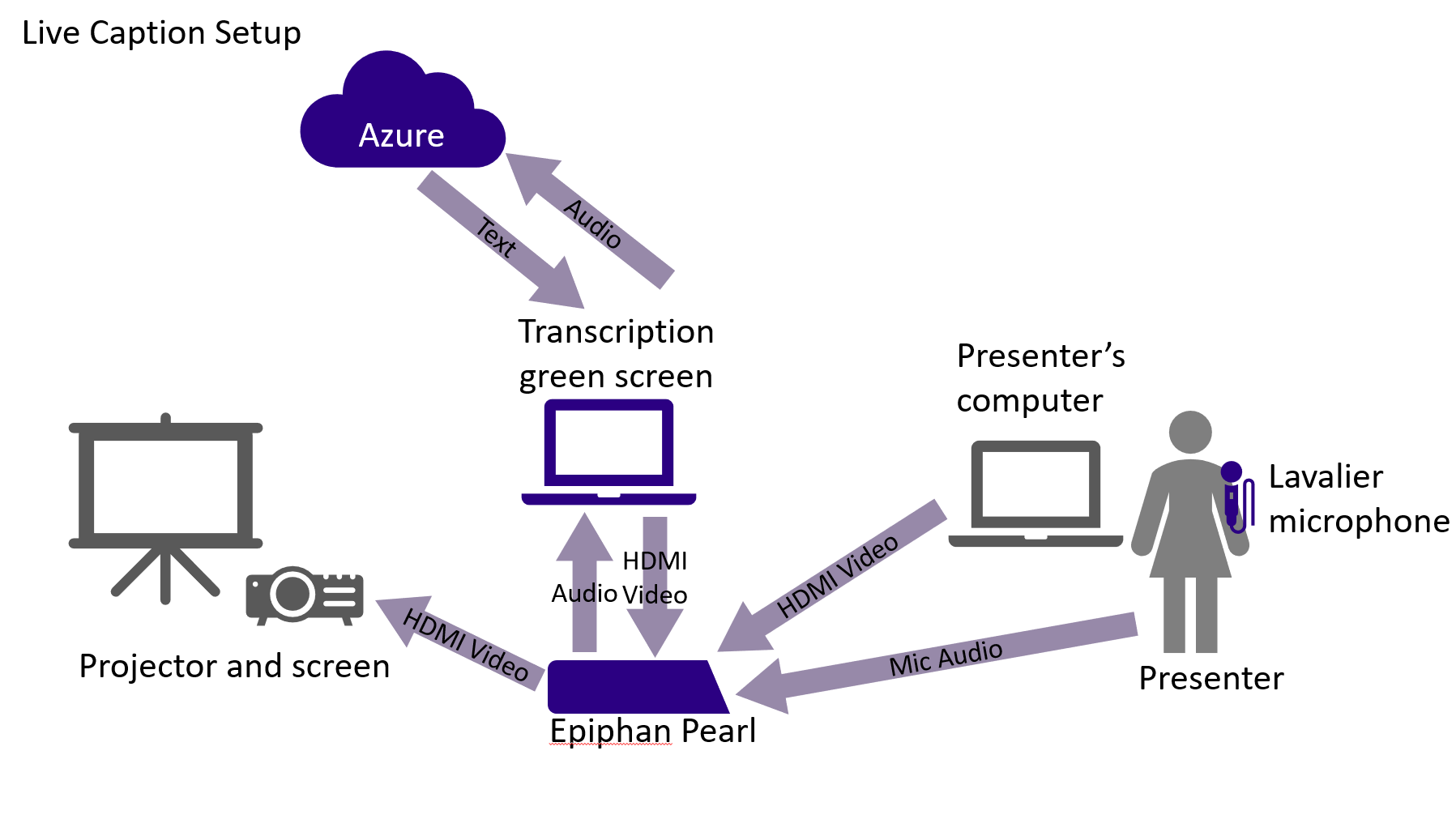

First, let’s talk about the captions. While PowerPoint does have live captions/subtitles, that only works when you are using PowerPoint. When you show a demo or go to a web page, taking PowerPoint off the screen, you lose that ability. So we had a special setup provided by Shawn Weisfeld (Twitter|GitHub).

How the Live Captions Worked

The presenter connects their laptop to the Epiphan Pearl with an HDMI cable so they can send the video (picture) from the laptop. The speaker wears a lavalier microphone, which sends audio to the Pearl. The transcription green screen computer takes audio from the Pearl, sends it to Azure to be transcribed using Cognitive Services, and overlays the returned transcription text on a green screen input that is sent back to the Pearl. The projector gets the combined output of the transcription text and the presenter’s computer video output.

You can see an example of what it looked like from my presentation on Saturday in the tweet below. There are lots more pictures of it on Twitter with the #SqlSatAustinBI hashtag.

Creating Accessible Power BI Reports @MMarie #sqlsataustinBi pic.twitter.com/BvSqJWCC4e

— Alicia Moniz (@AliciaMoniz) February 8, 2020

While this setup requires a bit more hardware, it worked so well! It took about 10 minutes to get it set up in the morning. As the speaker, I didn’t have to do anything but wear a mic. It transcribed everything I said regardless of what program my laptop was showing. There was very little lag. It seemed to be less than one second between when I would say something and when we would see it on the screen. While I try to speak clearly and slowly, sometimes I slip and fall back into speaking quickly. But the transcription kept up well. Some attendees said it was great to have the captions up on the screen to help them understand what I said when I occasionally spoke too quickly. The captions are placed at the top of the screen, above the image coming from my laptop, so I didn’t have to adjust my slides or anything to allow space for the captions.

The live captions were a big success. They helped not only people who had trouble hearing, but also those who spoke English as a second language and those who weren’t familiar with some of the terms I used and needed to see them spelled out.

Presenting With Sign Language Interpreters

This was my first time presenting with sign language interpreters to help communicate with my audience. Since the pre-con session lasted multiple hours, there were two interpreters in my room. They would switch places about every hour. They were kind enough to answer a few questions for me during breaks.

I asked them if it was difficult to sign all the technical terminology used and if they tried to study up on terms ahead of time. One of them told me that they don’t study the subject and they fingerspell all the technical terms. Most of my terms were spelled on my slides, and I saw the interpreter look at the slide to get the spelling. When someone asked a question about the font I was using, the interpreter asked me to spell it out, since it wasn’t written anywhere. I asked if having printed slides helped (I provided PDFs of the slides to the attendees at the beginning of the session). One of the interpreters told me no, because they were already watching the signer for questions and watching my slides and listening to me.

What I loved most about having the interpreters there was that the person using the service got to fully participate in the session. They asked questions and made comments like anyone else. And they participated in hands-on small group activities.

Check out this great photo of one of the interpreters in action during a small group activity.

Having ASL interpreters didn’t require any extra effort on my part. I didn’t have to practice with them beforehand or provide them with any of my conference materials. They were great professionals and were able to keep up with me through lecture, demos, small group exercises, and Q&A.

Sign language interpreters cost money. And they should – they provide a valuable service. In this case, the interpreters were provided by the State of Texas because the person using the service worked for the state government. Because this was training for their job, the person’s employer was obligated to provide this service. So we were lucky that it didn’t cost us anything.

While the SQLSaturday organizers were coordinating the ASL interpreters, they found out that there is a fund in Texas that can help with accessibility services when a person’s employer doesn’t/can’t provide them. It may not be the same in every state, but it’s definitely something to look into if you need to pay for interpreters for an event like this.

Make Your Next Event More Accessible

I have organized events, and I understand the effort that it requires. I’m so happy that Angela and Mike made the effort to make SQLSaturday Austin-BI a more inclusive event. I would like to challenge you to do the same for the next event you organize or the next presentation you give at a tech conference.

Your conference may not be able to afford the Epiphan Pearl (note: the original model we used is discontinued, but there is a new model) and the Azure costs. I’d like to see SQLSaturdays join together and purchase equipment and share across events – it would be great if PASS would help with this. If we can’t do that, we could always start small with the built-in capabilities in PowerPoint and work our way up from there.

It was a great experience as a speaker and as an audience member to have the live captions. And I was so happy that someone wanted to attend my session and was making the effort to sign up and request the ASL interpreters. I hope we see more of that in the future. But we need to do our part to let people know that we welcome that and we will work to make it happen.